
It's undeniable by now that the advent of artificial intelligence (AI), particularly generative AI, is revolutionising the corporate landscape. The media industry is no exception to this disruption. Be it large conglomerates or small shops, news organisations are quickly finding themselves at the forefront of an avalanche of transformation, leveraging AI to enhance content creation, distribution, and consumer engagement. The integration of artificial intelligence is not only streamlining operations but also opening up new avenues for delivering news, tailoring it to be more relevant and accessible to today's digital audiences.

With this shift comes the critical challenge of managing copyright, data, and intellectual property (IP). While regulation serves as an essential mechanism in protecting the creative output of news organisations and ensuring their journalistic efforts are shielded from unauthorised uses, the pace of technological advancement has far outstripped the evolution of regulatory frameworks. The challenge lies not only in securing the unique assets that news organisations generate against duplication, misuse and infringement but also in doing so in a way that maintains the sanctity and economic viability of the organisation and, more widely, the integrity of the industry.

For Dow Jones, one of the world's largest business and financial news corporations, this security is paramount. The News Corp subsidiary is actively exploring strategies to address the challenges and opportunities presented by AI, particularly in the context of copyright. And whilst others, including The New York Times, have opted for the litigious route, News Corp's global chief executive, Robert Thomson, has underscored a preference for negotiation over legal proceedings with AI companies to secure agreements for content usage.

“Those crucial negotiations are at an advanced stage,” Thomson shared at the company’s quarterly earnings briefing earlier this month. “We are hopeful that again News Corp will be able to set meaningful global precedents with digital companies that will assist journalists and journalism, and ensure that [generative AI] is not fuelled by digital dross.”

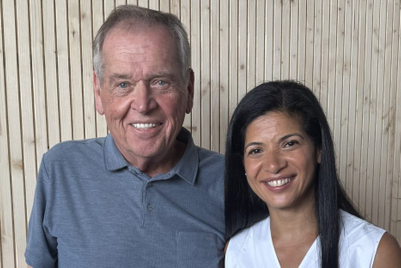

This stance of seeking "courtship rather than courtrooms" is one that Ingrid Verschuren, global head of Data Strategy and AI for Dow Jones, aligns with. Having spent over two decades at the corporation, she's witnessed firsthand the evolution of the role of data within the news and financial contexts, the pervasive immersion of all things digital and now, the influx that is AI.

Scroll on to read the highlights of Campaign's discussion with Verschuren on all the above and more, during her recent visit to Singapore.

Ingrid, you’ve been working in the data field for over 25 years, 24 of which you’ve spent at Dow Jones. In your expert opinion, is artificial intelligence as big of an emerging hype to you as it seems to be for the rest of the world?

I’m not entirely convinced it is, especially if you look back at how long Dow Jones has [already] been using AI. Also, [what’s] the definition of AI, and what exactly do we include in it? Think about the fact that 20 years ago, we'd already started using Natural Language Processing (NLP), which is a more rudimentary form of what we currently have. We started using it to apply tags to news articles that go into our news database, and we process around 600,000 articles a day.

I’ve been here a long time. I was hired to tag news articles manually years ago, when the news volume was much lower. There's no way you could do that today with humans! Since then, we’ve had to think about how we can do this in a more efficient and automated manner. That's how long we've been using AI. And it has evolved over time; we've used it in different iterations. There was a conversation that occurred between the Microsoft CEO [Satya Nadella] and OpenAI CEO [Sam Altman] at Davos [this year], and Altman said [when speaking of AI] that it’s going to be an evolution, not a revolution. It's not going to be this big explosion that everybody thought it was going to be. I think that’s interesting, and it’s what we’re seeing in the industry now.

As you mentioned, Dow Jones and other such multinational and large-scale companies have already been using AI for years—be it for supply chain, logistics or customer insights. Ironically, it’s media organisations that seem to have created the newfound chatter around the boom of customer-facing Gen AI. So, how do you tackle that misconception?

I think the biggest risk is misinformation. It’s misinformation or it's fake news or anything that is inaccurate [that is causing this]. So, a big piece of ensuring this misconception doesn’t grow is working closely with platforms that use content. It’s ensuring that the content is right, accurate and of high quality. And linked to that is—you have to pay for that. You can't just use it and not pay for it. Because in order for us to create high-quality and accurate journalism, it's a personal risk, but it's also expensive. We want to continue making sure we produce great content, because no matter what the machine does, you have to feed it with accurate information. So, I think that is one piece on how you can help mitigate the misinformation.

The second piece is news literacy. You have to continuously educate people, ensuring that they understand where news is coming from, what news actually means, and what really good journalism means as well. And then I think thirdly, it's critical to ensure that there is always a human in the loop. Yes, AI can help augment processes. But it is still super important that there are humans who oversee it and verify what is being done.

You’ve touched on an important point: Paywalls and access to content, both hot-button topics in recent times. There’s a lot of criticism that if good, high-quality content is hidden behind a paywall, then it simply becomes another way for privileged individuals with a higher socioeconomic status to benefit. In reality, those not from this cohort are the most susceptible to misinformation due to a lack of access to resources. What are your thoughts on this?

I think it's a mixture of both. Let's use Gen AI as one example [even though it’s not the only one]. Even if you think about how some of these platforms have trained their models, it's been a combination of information. Some of it is definitely free, and some of it is open source. But some of the information was paid for, and it was created by agreeing that actually you had to pay for it. So, it’s about being very transparent about what information actually goes into those models and what information comes out. If, ultimately, a platform decides that they want to offer some of that for free [and some not], I think you’ve still played a role in ensuring that people have accurate information. [Because] if the starting point isn't accurate information, then everything else is lost. I still believe that news literacy can help by continuously educating people that [you] don't trust everything you see, and you should verify it as many ways as you can. Will it be challenging? Absolutely. So, if you can do that through paid information, that is great, because it provides you with a greater guarantee that the information is accurate. But there is also media out there that is free, and it is super accurate as well.

*Note: An interesting point was also raised in the room here: people have always paid for news by buying a newspaper, even back when print was the primary medium, so why should the digital exchange be any different?

Speaking of AI datasets, different news organisations are handling data scraping by the likes of ChatGPT and other such models in varying ways. Whilst some, such as The New York Times, are looking to litigate, others are trying to strike a deal with generative AI platforms to partner up on content. What is the Dow Jones stance on this?

It is interesting. From day one, Robert Thomson [News Corp CEO] has been very vocal about the fact that it [the content] needs to be licensed. It needs to be paid for. And it needs to be paid for both within a training model, and for when the information comes out as well. Those are the two pieces, I think, so that's definitely what we are focusing on. Having said that, we also really, really want to protect copyright. So, if there are instances where copyright is being disregarded, we also want to make sure that we deal with that.

As an international organisation, how does Dow Jones universally regulate concerns around data and privacy, given each market you’re in will have different restrictions and perspectives on how to access and utilise data?

Our view is that we typically align with the strongest data privacy regulations across various jurisdictions. This is crucial in protecting us because, in essence, we aim to ensure that ethical content is used, safeguarding us against potential risks. What's intriguing in the broader AI discourse is the reflection on our existing processes in news production. Presently, we adhere to a set of principles governed by an Ethics and Standards Committee. Journalists employing sources must undergo thorough vetting, often double or triple vetted. Although the methods of generating content may vary, these fundamental principles persist. This consistency extends across different business units within Dow Jones, not only in news, but also in areas like our Risk and Compliance business, addressing concerns such as anti-money laundering, anti-bribery and corruption, where the standards remain identical.

Are you deploying AI across the board then, including in operational functions such as HR or finance?

Yes. So, our biggest concern right now is protecting our content. Then secondly, protecting the content from the publishers that we work with. And then thirdly, we are looking at how we can employ AI mostly to augment existing processes—whether that is in a newsroom or in other businesses within Dow Jones, or even just making internal processes more efficient. So that might be in finance or that might be in HR. And again, if you think about not saying 'AI', but using the word 'automation', then we've been using that for a very long time. And sometimes that involves AI and other times, automation might be very simplistic. So, we look at the problem, and then we try to find what is the right solution for it.

Can you tell us a little more about the newsroom application of AI? Are you using it to generate content?

The focus within the newsroom is really about augmenting it with AI. Rather than replacing journalists and journalism, we want to make absolutely sure that we have journalists who are ultimately responsible for the story. And I don't think that will change anytime soon. But that doesn't mean that the newsroom hasn't been using AI, but it may not necessarily be to generate stories. One of the interesting examples I can share: A while ago, we did a story on TikTok and trying to figure out how the algorithm actually works. In order to do that, we needed to create around 100 TikTok accounts, and they needed to be monitored 24/7. There's no way a human could have done that, but a machine can do it. Once we get all that information back, we can then analyse it. So, that’s a good example of how the newsroom is using it.

The other aspect we've been engaged in for quite some time pertains more to the news writing business, which focuses on financial news, particularly within the wealth and investment segment. Many of the stories they report on include earnings updates and Real Estate Investment Trusts (REITs) reporting on the market, and more. Interestingly, through straightforward formulaic data comparisons, a narrative can be generated that presents the top five winners and the bottom five performers of the day. We consistently apply this approach, known as automated insights. Most importantly, the story concludes with a clear label indicating that the content has been created automatically. This emphasis on transparency and trust, and their interconnected principles, ensures that we never present an automatically generated story as if it were crafted by us.
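To make the idea of a formula-driven, clearly labelled market story concrete, here is a minimal Python sketch. It is not Dow Jones's implementation: the `Quote` class, the `build_market_summary` function, the label wording and the sample tickers are all hypothetical, and the only things taken from the interview are the top-five/bottom-five comparison and the closing label that marks the story as automatically generated.

```python
from dataclasses import dataclass

# Hypothetical label text; the interview only says the story ends with a clear label.
AUTO_LABEL = "This story was generated automatically."


@dataclass(frozen=True)
class Quote:
    """One instrument's closing data for the day (illustrative structure)."""
    symbol: str
    previous_close: float
    last_price: float

    @property
    def pct_change(self) -> float:
        # The "formulaic data comparison": day-over-day percentage move.
        return (self.last_price - self.previous_close) / self.previous_close * 100.0


def build_market_summary(quotes: list[Quote], top_n: int = 5) -> str:
    """Rank quotes by percentage change and render a short, labelled narrative."""
    ranked = sorted(quotes, key=lambda q: q.pct_change, reverse=True)
    winners = ranked[:top_n]
    # Worst performers first; skip anything already listed as a winner.
    losers = [q for q in reversed(ranked) if q not in winners][:top_n]

    def describe(items: list[Quote]) -> str:
        return ", ".join(f"{q.symbol} ({q.pct_change:+.1f}%)" for q in items)

    lines = [
        f"Top {len(winners)} performers: {describe(winners)}.",
        f"Bottom {len(losers)} performers: {describe(losers)}.",
        AUTO_LABEL,  # the transparency label always closes the story
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up sample data, for illustration only.
    sample = [
        Quote("AAA", 10.0, 11.0),
        Quote("BBB", 20.0, 19.0),
        Quote("CCC", 5.0, 5.0),
    ]
    print(build_market_summary(sample, top_n=1))
```

Appending the label inside the rendering function, rather than leaving it to the caller, means a story cannot be produced without it, which mirrors the transparency point made above.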

What are some unique challenges you think news organisations face with AI that others may not be encountering?

I think one of the key aspects is that, when considering this journey, there are businesses that primarily focus on the consumer side and others that concentrate on the B2B or professional side. Because we have The Wall Street Journal, Barron’s, MarketWatch and our other news publications, what we care about the most is protecting our copyright. However, if you look at some of the B2B competitors, they might not necessarily be publishers as well. That’s not to say they don't care about that, but it may not be their top priority. So, I think that is one of the things that sets us apart. It also actually helps us, because ultimately, it means that if we want to develop solutions, we know that we own really good content in order to do that. It's a benefit to us from that perspective as well.

AI platforms are still very much in their infancy, and whilst OpenAI is leading the pack today, there may still be a host of other smaller players that crop up and start accessing your content. This may even just be through buying a paid subscription. How do you mitigate the risk of them taking your data, given you’ll most likely be striking copyright licensing deals with larger players as a priority?

That’s a good question, but ultimately, it’s still a clear violation of copyright, right? We’re very vigilant about protecting our content, which means that if we see people reproducing our content somewhere else for commercial gain, we will take action. The base principle with AI isn’t necessarily different from how we would handle copyright in any other circumstance. We want our content to be disseminated and distributed to as many people as possible, but we want to make sure that happens in a fair way, and that is always what our starting point is. That is always the first conversation we will have.

Finally, are you making any dedicated investments to upskilling your workforce when it comes to AI?

Yes. So, one element is understanding the technical skills and aspects, and the other is the processes, so it’s important to understand the required landscape. We have dedicated learning sessions that are available to all employees. They can do a deeper dive on specific use cases, and that really helps. Then, they can always speak to the more technical people to find out how we are exactly deploying AI, but I think the educational piece is incredibly important, and we are definitely committed to it.
